

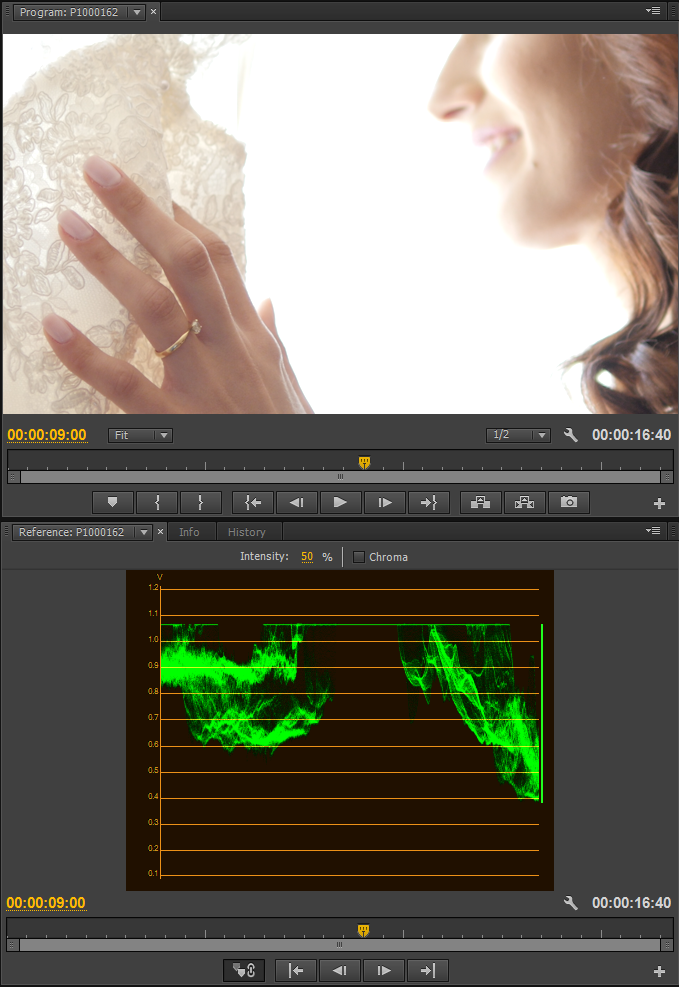

- cache: cache images for faster training

During training, watch the mAP to see how your detector is performing (see this post on breaking down mAP).
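To make the metric a little more concrete: mAP is commonly reported at an IoU (intersection over union) threshold of 0.5, meaning a predicted box counts as a match only if it overlaps a ground-truth box by at least that much. The sketch below shows just that overlap test. It is an illustration, not the evaluation code YOLOv5 uses, and the function names and the `(x_min, y_min, x_max, y_max)` box layout are our own assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes.

    The corner-based box layout is an assumption made for this sketch.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred_box, truth_box, threshold=0.5):
    """Hypothetical helper: does a prediction overlap the truth box enough to count?"""
    return iou(pred_box, truth_box) >= threshold


if __name__ == "__main__":
    truth = (0, 0, 10, 10)
    print(is_match((2, 0, 12, 10), truth))  # boxes shifted slightly apart
    print(is_match((5, 0, 15, 10), truth))  # boxes shifted further apart
```

Averaging precision over recall levels and over classes is what turns this per-box test into mAP, which is the part the linked post breaks down.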


    curl -L "" > roboflow.zip; unzip roboflow.zip; rm roboflow.zip

Note: you can now also download your data with the Roboflow PIP package:

    #from roboflow import Roboflow
    #rf = Roboflow(api_key="YOUR API KEY HERE")
    #project = rf.workspace().project("YOUR PROJECT")
    #dataset = project.version("YOUR VERSION").download("yolov5")

Downloading in Colab.

Downloading a custom object dataset in YOLOv5 format

The export includes a .yaml file called data.yaml specifying the location of a YOLOv5 images folder, a YOLOv5 labels folder, and information on our custom classes.

Define YOLOv5 Model Configuration and Architecture

Next we write a model configuration file for our custom object detector. For this tutorial, we chose the smallest, fastest base model of YOLOv5. You have the option to pick from other YOLOv5 models, including the larger YOLOv5m, YOLOv5l, and YOLOv5x. You can also edit the structure of the network in this step, though you will rarely need to.

Here is the YOLOv5 model configuration file, which we term custom_yolov5s.yaml:

    nc: 3

With our data.yaml and custom_yolov5s.yaml files ready, we can get started with training.

To kick off training, we run the training command with the following options:

- epochs: define the number of training epochs.
- weights: specify a custom path to weights. (Note: you can download weights from the Ultralytics Google Drive folder.)
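To tie the pieces above together, here is a rough sketch of what a data.yaml could look like and how the training options might be assembled into a command line. Treat the details as assumptions: the folder paths and the three class names are placeholders for a blood cell dataset, the helper names are hypothetical, and the flag spellings (`--data`, `--cfg`, `--epochs`, `--weights`, `--cache`) follow the YOLOv5 `train.py` script as we understand it, so check them against the version of the repository you cloned.

```python
import shlex


def render_data_yaml(train_dir, val_dir, class_names):
    """Render a minimal data.yaml: image folders, class count, class names."""
    lines = [
        f"train: {train_dir}",
        f"val: {val_dir}",
        "",
        f"nc: {len(class_names)}",      # number of classes
        f"names: {class_names!r}",      # Python list repr is a valid YAML flow sequence
    ]
    return "\n".join(lines) + "\n"


def build_train_command(data_yaml, cfg_yaml, epochs=100, weights="", cache=True):
    """Assemble the training command as an argument list (flag names are assumed)."""
    cmd = [
        "python", "train.py",
        "--data", data_yaml,        # dataset description
        "--cfg", cfg_yaml,          # model configuration
        "--epochs", str(epochs),    # number of training epochs
        "--weights", weights,       # custom path to weights; empty string means none given
    ]
    if cache:
        cmd.append("--cache")       # cache images for faster training
    return cmd


if __name__ == "__main__":
    # Placeholder paths and class names, not values taken from the real export.
    print(render_data_yaml("../train/images", "../valid/images",
                           ["Platelets", "RBC", "WBC"]))
    print(shlex.join(build_train_command("../data.yaml",
                                         "./models/custom_yolov5s.yaml")))
```

Building the command as a list keeps quoting unambiguous, which matters here because the weights path may be an empty string.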


Once uploaded you can choose preprocessing and augmentation steps:

The settings chosen for the BCCD example dataset

Then, click Generate and Download and you will be able to choose YOLOv5 PyTorch format.

When prompted, select "Show Code Snippet." This will output a download curl script so you can easily port your data into Colab in the proper format.
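If you would rather not shell out to curl, the same download, unzip, and clean-up sequence can be approximated with the Python standard library. This is only a sketch under our own assumptions: `fetch_and_extract` is a hypothetical helper, and the URL is whatever export link Roboflow shows you in the code snippet.

```python
import shutil
import tempfile
import urllib.request
import zipfile
from pathlib import Path


def fetch_and_extract(url, dest_dir):
    """Download a zip archive from `url`, unpack it into `dest_dir`, and
    return the relative paths of the extracted files."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)

    # The archive lives in a temporary directory, so it is removed afterwards
    # (the equivalent of `rm roboflow.zip`).
    with tempfile.TemporaryDirectory() as tmp:
        archive = Path(tmp) / "roboflow.zip"
        with urllib.request.urlopen(url) as response, open(archive, "wb") as out:
            shutil.copyfileobj(response, out)
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(dest)

    return sorted(p.relative_to(dest).as_posix()
                  for p in dest.rglob("*") if p.is_file())
```

Calling it with your export link and a target folder should leave the images, labels, and data.yaml in that folder, ready for the training step.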

Labeling images with Roboflow Annotate

Once you have labeled data, you can move it into Roboflow by dragging your dataset into the app in any format (VOC XML, COCO JSON, TensorFlow Object Detection CSV, etc.).


    import torch
    from IPython.display import Image  # for displaying images
    from utils.google_utils import gdrive_download  # for downloading models/datasets

    print('torch %s %s' % (torch.__version__, torch.cuda.get_device_properties(0) if torch.cuda.is_available() else 'CPU'))

It is likely that you will receive a Tesla P100 GPU from Google Colab. Here is what we received:

    torch 1.5.0+cu101 _CudaDeviceProperties(name='Tesla P100-PCIE-16GB', major=6, minor=0, total_memory=16280MB, multi_processor_count=56)

The GPU will allow us to accelerate training time. Colab comes preinstalled with torch and cuda. If you are attempting this tutorial on a local machine, there may be additional steps to take to set up YOLOv5.

Download Custom YOLOv5 Object Detection Data

In this tutorial we will download object detection data in YOLOv5 format from Roboflow. We train YOLOv5 to detect cells in the blood stream with a public blood cell detection dataset. You can follow along with the public blood cell dataset or upload your own dataset.

To export your own data for this tutorial, sign up for Roboflow and make a public workspace, or make a new public workspace in your existing account. If your data is private, you can upgrade to a paid plan for export to use external training routines like this one or experiment with using Roboflow's internal training solution.


Then, we can take a look at our training environment provided to us for free from Google Colab.


- Export Saved YOLOv5 Weights for Future Inference

We recommend following along concurrently in this YOLOv5 Colab Notebook.

To start off we first clone the YOLOv5 repository and install dependencies. This will set up our programming environment so it is ready to run object detection training and inference commands.

    !pip install -U -r yolov5/requirements.txt  # install dependencies

The YOLO family of object detection models grows ever stronger with the introduction of YOLOv5. In this post, we will walk through how you can train YOLOv5 to recognize your custom objects for your use case. We use a public blood cell detection dataset, which you can export yourself. You can also use this tutorial on your own custom data.

To train our detector we take the following steps:

- Download Custom YOLOv5 Object Detection Data
- Define YOLOv5 Model Configuration and Architecture
